About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research, including experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create these patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

26th September 2024 04:30 PM

Linguistics Colloquium Speaker: Dr. Chris Collins to speak on "A Merge-Based Approach to Argument Structure"

The Department of Linguistics proudly presents Dr. Chris Collins, Professor at New York University, who will give a talk titled "A Merge-Based Approach to Argument Structure".

This talk outlines the Merge-based approach to argument structure developed in Collins 2024 (updating, defending, and extending Collins 2005). The predictions of the Merge-based theory of implicit arguments will be compared to the predictions made by non-Merge-based theories.

Funded in part by the GPSAFC and open to the graduate community.

Reference:

- Collins, Chris. 2024. Principles of Argument Structure: A Merge-Based Approach. Cambridge, MA: MIT Press.

Location: 106 Morrill Hall, Cornell University, 159 Central Avenue, Ithaca, NY 14853-4701, USA

27th September 2024 11:15 AM

C.Psyd talk: How do listeners integrate multiple sources of information across time during language processing?

The C.Psyd Seminar will feature the following Zoom talk by Dr. Wednesday Bushong, who is an Assistant Professor of Psychology and Cognitive & Linguistic Sciences at Wellesley College.

Her talk is titled: How do listeners integrate multiple sources of information across time during language processing?

Contact Dr. Marten van Schijndel for the Zoom link; otherwise, we'll meet in B07 to watch Dr. Bushong's talk on the big monitor.

Abstract:

Understanding spoken words requires listeners to integrate large amounts of linguistic information over time at multiple levels (phonetic, lexical, syntactic, etc.). There has been considerable debate about how semantic context affects word recognition, with preceding semantic context often viewed as a constraint on the hypothesis space of future words, and following semantic context as a mechanism for disambiguating previous input.

In this talk, I will present recent work from my lab and others' in which it appears that human behavior resembles neither of these options; instead, converging evidence from behavioral, neural, and computational modeling work suggests that listeners optimally integrate auditory and semantic-contextual knowledge across time during spoken word recognition. This holds true even when such sources of information are separated by significant time delays (several words).

These results have significant implications for psycholinguistic theories of spoken word recognition, which generally assume rapidly decaying representations of prior input and rarely consider information beyond the boundary of a single word.

Furthermore, I will argue that thinking of language processing as a cue integration problem can connect recent findings across other domains of language understanding (e.g., sentence processing).

Location: B07 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

30th September 2024 12:20 PM

Phonetics Lab Meeting

We will discuss this Babel & Johnson paper, published in JASA (the Journal of the Acoustical Society of America):

Abstract:

When a bilingual switches languages, do they switch their voice?

Using a conversational corpus of speech from early Cantonese-English bilinguals (n = 34), this paper examines the talker-specific acoustic signatures of bilingual voices. Following the psychoacoustic model of voice, 24 filter and source-based acoustic measurements are estimated.

The analysis summarizes mean differences for these dimensions and identifies the underlying structure of each talker's voice across languages with principal component analyses.

Canonical redundancy analyses demonstrate that while talkers vary in the degree to which they have the same voice across languages, all talkers show strong similarity with themselves, suggesting an individual's voice remains relatively constant across languages. Voice variability is sensitive to sample size, and we establish the required sample to settle on a consistent impression of one's voice.

These results have implications for human and machine voice recognition for bilinguals and monolinguals and speak to the substance of voice prototypes.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

2nd October 2024 12:20 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

No PhonDAWG this week; instead, we encourage everyone to attend Dr. Molly Babel's informal Phonetics Lab talk on Friday.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response-time measurement

- Graphical task builder, which lets researchers build experiments interactively without writing any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk: subjects for web-based experiments

- Sona Systems: Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080 Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak

These servers, in turn, are nodes in the G2 Computing Cluster, which currently comprises 195 servers (82 CPU-only and 113 GPU servers) with a total of ~7,400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.
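As an illustration only, here is a minimal Python sketch that generates a SLURM batch script for a single-GPU job. The job name, the command (`train.py`), and the resource values are hypothetical placeholders, not G2 defaults; the `#SBATCH` directives shown are standard SLURM options, but any partition or account settings the G2 cluster requires are not covered here.

```python
def make_sbatch_script(job_name: str, command: str, gpus: int = 1,
                       hours: int = 4, mem_gb: int = 16) -> str:
    """Return the text of a SLURM batch script.

    All resource values are illustrative assumptions, not G2 cluster defaults.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --gres=gpu:{gpus}",           # number of GPUs to request
        f"#SBATCH --time={hours:02d}:00:00",    # wall-clock limit as HH:MM:SS
        f"#SBATCH --mem={mem_gb}G",             # memory per node
        f"#SBATCH --output={job_name}_%j.out",  # SLURM expands %j to the job ID
        command,                                # the work the job actually runs
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical example: a deep-learning training run on one GPU.
    print(make_sbatch_script("asr-train", "python train.py"), end="")
```

Saving the printed text as, for example, `job.sbatch` and running `sbatch job.sbatch` on a cluster login node hands the job to SLURM, which then starts it on whichever node has the requested resources free.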

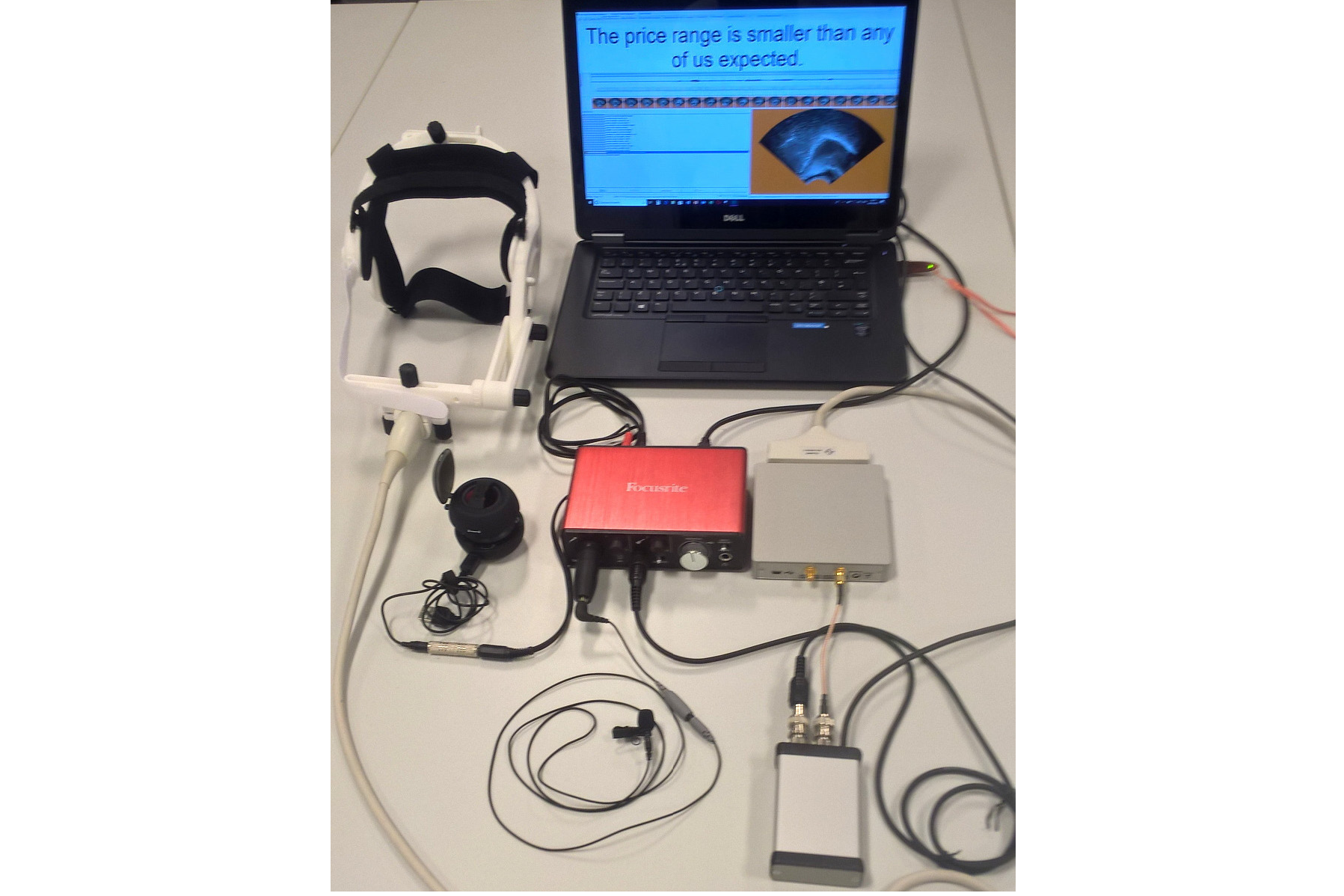

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page - only available to Cornell faculty & students - outlines the corpora access procedures for faculty supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency, real-time auditory feedback experiments via MATLAB and Audapter.