About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

12th November 2020 12:00 PM

Webinar: Exploring Nature Through Sound and Music

Science and music combine to reveal the stories of species from around the globe! Join staff from the Cornell Lab of Ornithology’s Center for Conservation Bioacoustics to explore how and why animals make sound and how these sounds can be used for conservation.

Meet researchers working in mountains, rainforests, and oceans and hear their acoustic data transformed into live music by your host, the Lab's Ben Mirin (aka DJ Ecotone). There will be an opportunity for audience Q&A. The webinar is free, but Zoom registration is required.

Location: Webinar: Exploring Nature Through Sound and Music

12th November 2020 04:10 PM

Thursday, November 12, 2020 at 4:10pm - Linguistics Colloquium Speaker: Juliet Stanton of NYU - Rhythm is gradient

The Department of Linguistics proudly presents Assistant Professor Juliet Stanton from New York University as a speaker in our colloquium series.

Rhythm is gradient: evidence from -ative and -ization

The rhythmic constraints *Clash and *Lapse are commonly assumed to evaluate syllable-sized constituents: a sequence of two adjacent stressed syllables (óó) violates *Clash, while a sequence of two stressed syllables, separated by two stressless syllables (óooó), violates *Lapse (see e.g. Prince 1983, Gordon 2002 for *Clash; Green & Kenstowicz 1995, Gordon 2002 for *Lapse). In this talk I propose that *Clash and *Lapse can be evaluated gradiently: speakers calculate violations off of a phonetically realized output representation. The closer the two stressed syllables, the greater the violation of gradient *Clash; the further away the two stressed syllables, the greater the violation of gradient *Lapse.

Evidence for this claim comes from patterns of secondary stress in Am. English -ative and -ization: in both classes of forms, the inner suffix (-ate and -ize) is more likely to bear stress the further away it is from the rightmost stem stress. Time permitting, we will discuss other sources of evidence for gradient rhythm, including Am. English post-tonic syncope (Hooper 1978) and secondary stress in Russian compounds (Gouskova & Roon 2013).

Location: Event Information

13th November 2020 12:40 PM

Friday, Nov 13, 12:40PM: Phonetics Lab Meeting - Presentation by Dr. Eli Marshall from Music

For the PLab meeting, Dr. Eli Marshall (Cornell Department of Music) will present some of his research.

Background and optional reading for the talk:

Below is a link to Temperley et al.; some of my talk constitutes a reply. In particular, Example 3 (in Part 1) is a good example of pitch tracking and playback using the pYIN algorithm via the software Tony (I'll offer a soft critique of this method). In the text, Part 3 is also worth a look.

Temperley, Ren, and Duan. 2017. "Mediant Mixture and “Blue Notes” in Rock: An Exploratory Study." https://mtosmt.org/issues/mto.17.23.1/mto.17.23.1.temperley.html

The Tony pitch-tracking software used by Temperley: https://code.soundsoftware.ac.uk/projects/tony

I'll just add that knowledge of music terminology and notation is unnecessary for this informal talk, which is really a prospective line of research. I'd most look forward to comments from you and the group as I highlight questions encountered when analyzing vocal music recordings through phonetics-style segmentation, pitch/f0 measurement, and modeling.

Location:

13th November 2020 08:00 PM

Sam Tilsen to speak at KEIO X ICU LINC talk series

Dr. Sam Tilsen will give a talk on "The emergence of non-local phonological patterns in the Selection-coordination-intention framework" at the 10th annual ICU Linguistics Colloquium (KEIO X ICU LINC). The conference is sponsored by ICU (the International Christian University of Japan), in collaboration with Keio University.

Dr. Tilsen's talk is scheduled from Friday, November 13, 10AM to Noon (JST), 8PM-10PM (EST).

Location: KEIO X ICU LINC

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response time measurement

- Graphical task builder, which lets researchers build experiments interactively without writing any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments.

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth.

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak.

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with a total of ~7400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.
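As a rough illustration of what submitting a batch job involves, the Python sketch below renders a SLURM batch script from a few resource requests. The `#SBATCH` directives it emits are standard SLURM options, but everything else is an assumption made for the example: the `JobSpec` and `build_sbatch_script` names are hypothetical, the resource values are placeholders, and the G2 Cluster's actual partitions, limits, and account settings are not shown here.

```python
from dataclasses import dataclass


@dataclass
class JobSpec:
    """Resources requested for one batch job (all values are illustrative)."""
    name: str
    command: str
    gpus: int = 1
    cpus: int = 4
    mem_gb: int = 16
    hours: int = 2


def build_sbatch_script(job: JobSpec) -> str:
    """Render a SLURM batch script using standard #SBATCH directives."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job.name}",
        # %j is expanded by SLURM to the numeric job ID.
        f"#SBATCH --output={job.name}_%j.out",
        f"#SBATCH --cpus-per-task={job.cpus}",
        f"#SBATCH --mem={job.mem_gb}G",
        f"#SBATCH --time={job.hours:02d}:00:00",
    ]
    # Only request GPUs when the job needs them, so CPU-only jobs
    # can be scheduled on CPU-only nodes.
    if job.gpus > 0:
        lines.append(f"#SBATCH --gres=gpu:{job.gpus}")
    lines += ["", job.command, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical job: the command and script name are placeholders.
    spec = JobSpec(name="train_model", command="python train.py")
    print(build_sbatch_script(spec))
```

The printed text can be saved to a file and submitted with `sbatch`; the scheduler then places the job on a node that satisfies the requested resources. Check the cluster's own documentation for the required partition and any site-specific options before using a script like this.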

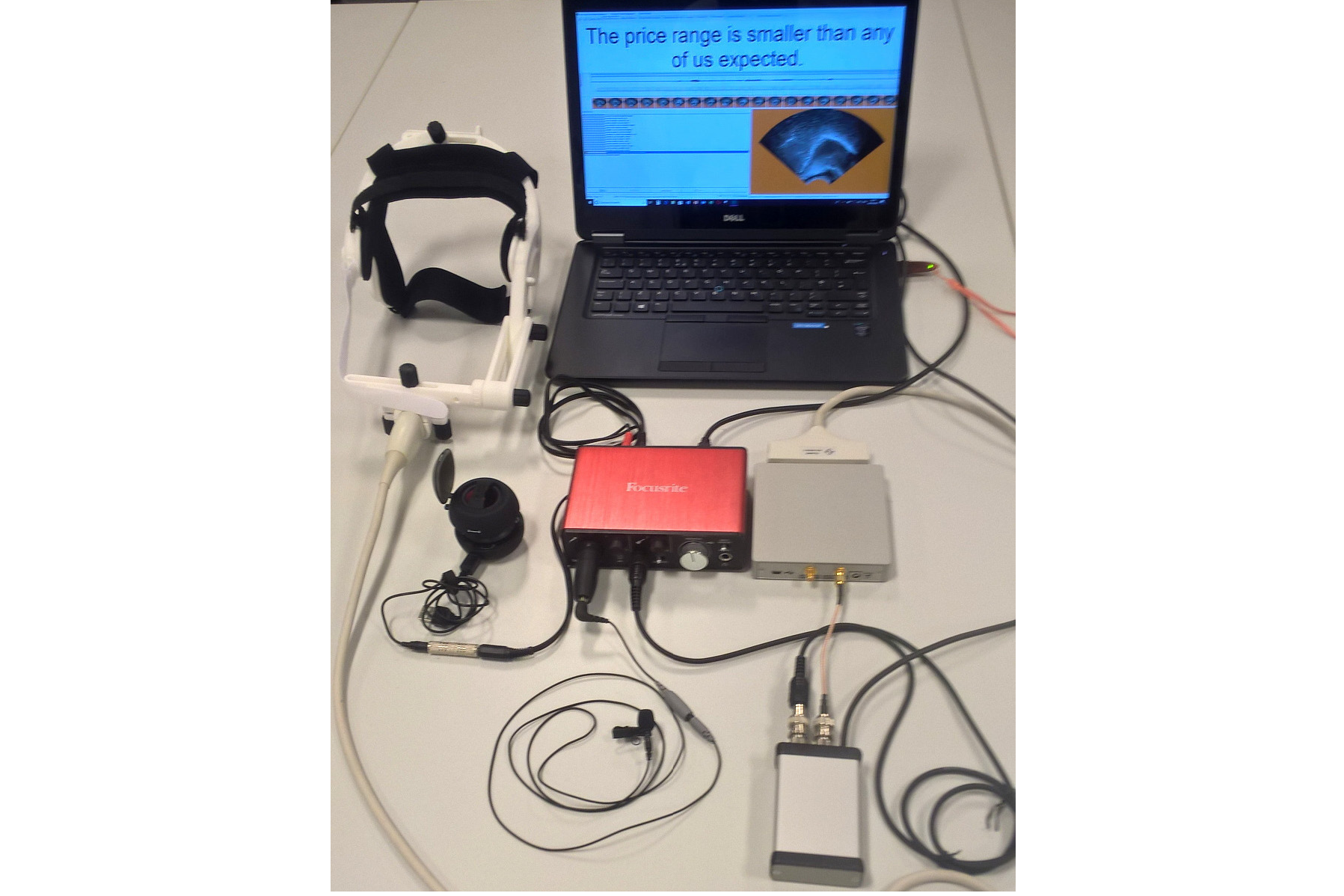

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page - only available to Cornell faculty & students - outlines the corpora access procedures for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes--from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency, real-time auditory feedback experiments via MATLAB and Audapter.