About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

17th November 2023 12:20 PM

Phonetics Lab Meeting

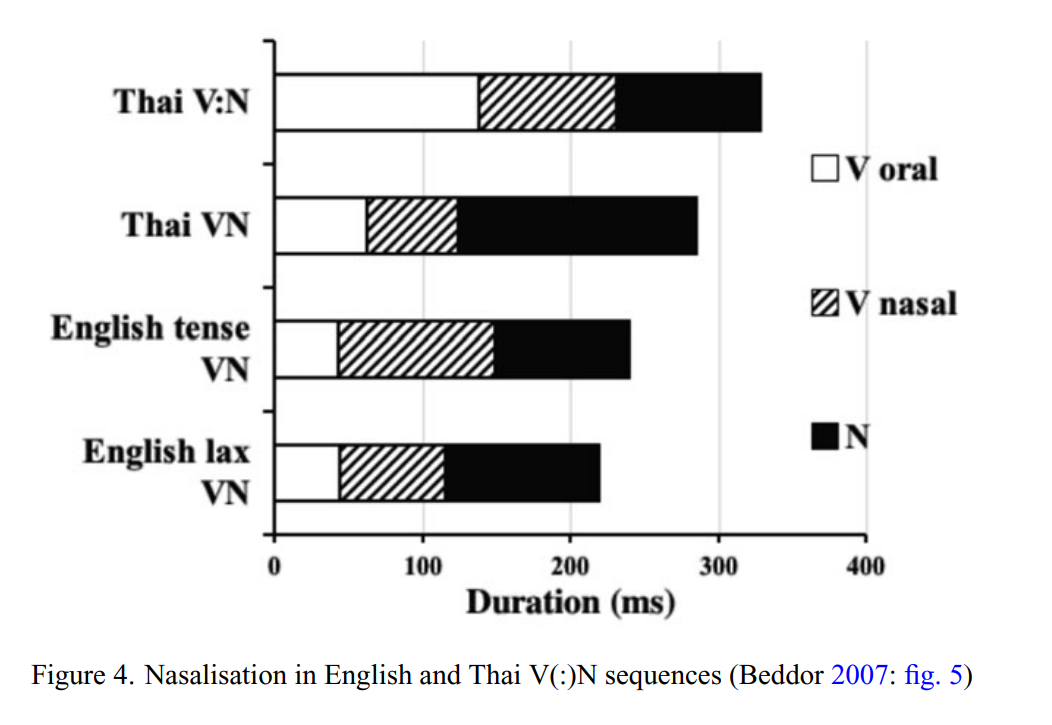

We will continue our discussion of Kramer's paper on vowel nasalisation:

Location: B11 Morrill Hall, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

27th November 2023 04:30 PM

Linguistics Colloquium Speaker: Anna Bugaeva to speak on Appositive Possession in Ainu and Indigenous American Languages

The Department of Linguistics proudly presents Dr. Anna Bugaeva, Associate Professor at Tokyo University of Science.

Dr. Bugaeva will speak on "Appositive Possession in Ainu and Indigenous American Languages: Inherited Feature or Rarum?".

Abstract:

This talk is based on Bugaeva et al. (2022) and draws on Bugaeva's ongoing collaboration with Johanna Nichols, which aims to determine which American linguistic populations Ainu matches most closely.

Ainu is probably a good match for northwestern North America, as well as for the loose areal grouping known as Paleosiberian, but we need to verify this supposition using quantitative typology.

Appositive possession is one shared typological property proposed by Nichols; this talk presents the Ainu construction in detail, and then examines its worldwide and North American distribution.

I then examine Nichols' current hypothesis that North America was settled in three distinct waves: coastal entries ~25,000 and ~15,000 years ago, and an inland entry from ~15,000 years ago.

Location: 165 McGraw Hall, 141 Central Ave, Ithaca, NY 14850

29th November 2023 12:20 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

Yao will discuss her Q-paper project.

Location: B11 Morrill Hall, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

30th November 2023 04:30 PM

Linguistics Colloquium Speaker: Amalia Skilton will discuss Anaphoric Demonstratives

The Cornell Linguistics Department proudly presents Linguistics Colloquium Speaker and Cornell Klarman Fellow Dr. Amalia Skilton, who will give a talk titled:

"Anaphoric demonstratives occur with fewer and different pointing gestures than exophoric demonstratives"

Abstract:

Demonstratives such as this/that and here/there have a close relationship with gesture: when people produce these words, they are very likely to point. But not every demonstrative occurs with a gesture, and not every pointing gesture displays the same articulatory form.

In this talk, I explore the lexical and information-structural factors that affect the co-organization of demonstratives and pointing. My data comes from speakers of Ticuna, an Indigenous Amazonian language isolate that has six place-referring demonstratives.

I show that four of the Ticuna place-referring demonstratives are always exophoric (i.e., pick out a referent from the physical surroundings) while two are primarily anaphoric (picking out a referent from the preceding discourse), and I argue that this contrast in phoric type overlaps with, but is distinct from, the pragmatic contrast between new and previously mentioned referents.

Looking to a video corpus of landscape description interviews, I then examine how phoric type and new vs. mentioned status affect the participants' use of pointing gestures accompanying demonstratives. In contrast to claims in the pragmatics literature, Ticuna interview participants pointed with a substantial minority of anaphoric demonstratives.

However, they pointed more often with exophoric demonstratives and with demonstratives, whether exophoric or anaphoric, that introduced new referents. Participants were also more likely to use index-finger handshapes with exophoric demonstratives, and to use full arm extension with demonstratives introducing new referents.

These findings indicate that the lexical and pragmatic properties of demonstratives influence, but do not deterministically control, the rate and form of co-demonstrative gesture.

Bio:

Amalia studies language use in interaction across cultures and the lifespan. Her research interests include first language acquisition, pragmatics, and language documentation. All of her research is based on her own fieldwork: she has conducted over 26 months of fieldwork in the Amazon Basin with speakers of two Indigenous languages, Ticuna (isolate) and Máíhɨ̃ki (Tukanoan).

In July 2021, Amalia became a Klarman Fellow in the Department of Linguistics at Cornell University. Previously, she was an NSF SBE Postdoctoral Research Fellow, affiliated with the Department of Linguistics at UT Austin and the Language Development Department at the Max Planck Institute for Psycholinguistics, Nijmegen. Amalia received her PhD from Berkeley Linguistics in 2019.

In her current postdoctoral work, Amalia studies language acquisition and pragmatic development in young children (aged 1-7 years) learning Ticuna. Specifically, she asks how children acquire demonstrative words like this/that and here/there; how they co-organize these words with visual behaviors, such as pointing and gaze; and how demonstrative use varies cross-linguistically. Read more about Amalia's research with Ticuna speakers on her Research web page.

Location: 106 Morrill Hall, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimulus, including audio and video

- Highly accurate response-time measurement

- Graphical task builder, which lets researchers build experiments interactively without writing any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with a total of ~7,400 CPU cores and 698 GPUs.

The G2 Cluster uses the Slurm Workload Manager to schedule batch jobs, which can run on any available server or GPU on any cluster node.
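As a rough illustration of what a batch job for a Slurm-managed cluster like G2 might look like, the sketch below builds the text of a job script from standard `#SBATCH` options. It is a hypothetical example, not a G2 template: the job name, the command `python train.py`, and the resource amounts are placeholders, and no partition or account name is assumed because those are site-specific.

```python
from typing import Optional


def make_sbatch_script(job_name: str, command: str, gpus: int = 1,
                       cpus: int = 4, mem_gb: int = 16,
                       time_limit: str = "04:00:00",
                       partition: Optional[str] = None) -> str:
    """Build the text of a Slurm batch script using standard #SBATCH options.

    All defaults are illustrative placeholders, not G2 requirements.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
        # %j is replaced by the Slurm job ID in the output file name.
        f"#SBATCH --output={job_name}_%j.out",
    ]
    if gpus > 0:
        # Request GPUs as a generic resource (GRES) on whichever node runs the job.
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    if partition is not None:
        # Partition names are site-specific; none is assumed by default.
        lines.append(f"#SBATCH --partition={partition}")
    # All #SBATCH directives must precede the first executable command.
    lines += ["", command, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical job: "train.py" stands in for your own training script.
    print(make_sbatch_script("asr-train", "python train.py", gpus=1))
```

Saving the printed script to a file (for example `job.sh`) and running `sbatch job.sh` queues the job; Slurm then places it on a node that has the requested CPUs, memory, and GPUs free.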

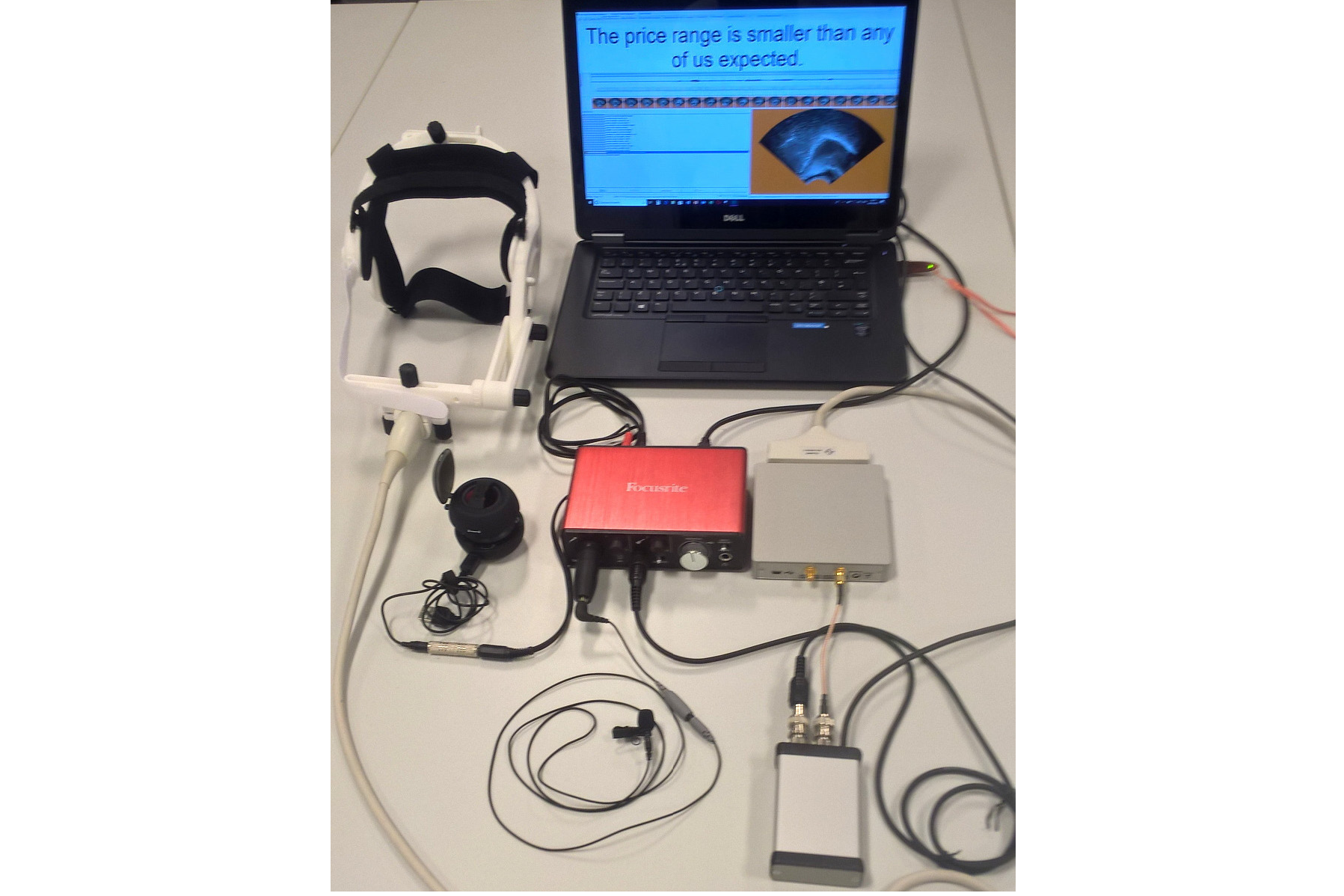

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page, available only to Cornell faculty and students, outlines the corpora access procedures for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasound experimentation.

We also have the necessary software and audio interfaces to perform low-latency, real-time auditory feedback experiments via MATLAB and Audapter.
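Latency matters in feedback experiments because speakers hear their own (possibly altered) speech through the processing loop. As a back-of-the-envelope sketch, and not Audapter's own calculation, each audio buffer of N samples at sample rate f_s adds N / f_s seconds of delay, so a loop that buffers once on input and once on output adds at least twice that. The function names and the default of two buffers are illustrative assumptions; converter, driver, and processing delays are ignored unless passed in as `extra_ms`.

```python
def buffer_latency_ms(frame_size: int, sample_rate_hz: float) -> float:
    """Delay contributed by one audio buffer of frame_size samples, in ms."""
    if frame_size <= 0 or sample_rate_hz <= 0:
        raise ValueError("frame_size and sample_rate_hz must be positive")
    return 1000.0 * frame_size / sample_rate_hz


def estimated_feedback_delay_ms(frame_size: int, sample_rate_hz: float,
                                n_buffers: int = 2,
                                extra_ms: float = 0.0) -> float:
    """Rough loop delay: n_buffers buffers (e.g. input + output) plus fixed extras."""
    return n_buffers * buffer_latency_ms(frame_size, sample_rate_hz) + extra_ms


if __name__ == "__main__":
    # Compare a few hypothetical buffer sizes at a 48 kHz sample rate.
    for frame in (32, 64, 128, 256):
        delay = estimated_feedback_delay_ms(frame, 48_000)
        print(f"{frame:4d} samples @ 48 kHz -> {delay:.2f} ms")
```

Smaller buffers lower the delay but give the audio interface less time to process each block, which is the usual trade-off when tuning a real-time feedback setup.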