About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

7th February 2024 12:20 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

Fengyue will present her forced alignment work.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

8th February 2024 04:30 PM

Linguistics Colloquium and ASL Linguistics Lecture Series Speaker: Cindy Officer to speak on Acculturating into Deaf Communities as Adults

The Cornell Linguistics Department proudly presents Linguistics Colloquium and ASL Linguistics Lecture Series Speaker Dr. Cindy Officer, Senior Lecturer at the National Technical Institute for the Deaf, Rochester Institute of Technology.

Dr. Officer's talk is titled: Acculturating into Deaf Communities as Adults

ASL/English interpretation will be provided.

Abstract:

Dr. Officer will share her phenomenological study on the lived experiences of Deaf and Hard of Hearing adults with hearing loss who, in adulthood, acculturated into Deaf communities that use ASL. Officer will discuss a structure that revealed acculturation started when the participants interacted with other members of the Deaf community while learning some signs.

For ten participants, the desire to access communication and to connect with others like themselves eventually made acculturation an intrinsic investment. Acculturation stabilized when the participants were able to adeptly moderate interaction within and outside a Deaf community.

A descriptive phenomenological structure revealed seven essential constituents for acculturation:

- (a) DECODE-VISUAL,

- (b) GHOST,

- (c) UPROOT,

- (d) CONFUSE DEAF ME,

- (e) PERCEIVE SIMILAR,

- (f) LONG ACCESS,

- (g) MANAGE DEAF AND HEARING SPHERES.

Each essential constituent will be described as a composite and within a structure.

Bio:

Cindy Officer is a senior lecturer who teaches writing to deaf and hard of hearing students at the National Technical Institute for the Deaf at RIT. She comes from a family with many members who have some form of hearing loss.

In a broad sense, she has lived in a geographically defined Deaf community for more than 36 years. For added context, she spent these years at Gallaudet University, a liberal arts college for deaf and hard of hearing people, first as a student and then as an educator and administrator.

She frequently wondered why some adults with a hearing loss found their way to a Deaf community while others did not, akin to Barbie wondering why some women in the Real World did not subscribe to the ways of Barbieland. Her dissertation work identifies a foundation that an adult with a hearing loss may need to transition.

Cindy holds a doctorate in postsecondary and adult education and a master’s in administration. When not teaching, Cindy enjoys reading, playing card and board games, traveling, and spending time with her family.

Location: 106 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

9th February 2024 01:00 PM

Dr. Edith Aldridge to speak on Indigenous (Austronesian) Language Endangerment and Revitalization in Taiwan

The Cornell East Asia Program & the Cornell Department of Linguistics proudly present Dr. Edith Aldridge, Research Fellow at Academia Sinica, Taiwan.

Dr. Aldridge will give a talk on Indigenous (Austronesian) Language Endangerment and Revitalization in Taiwan, with an introduction by Dr. John Whitman of Cornell's Department of Linguistics.

Abstract:

Taiwan is the homeland of the Austronesian language family, speakers of Proto-Austronesian having migrated there from southeastern China roughly 6,000 years ago before proceeding to populate the Philippines, Indonesia/Malaysia, Madagascar, and the Pacific islands.

As many as twenty distinct languages were spoken in Taiwan at the beginning of foreign contact in the 17th century. Now a third of these are extinct, and the rest are endangered.

The first of these to decline were languages spoken in lowland areas, in contact first with the small Dutch presence in southern Taiwan in the mid-17th century and subsequently with waves of Chinese migration in the 18th and 19th centuries. Intensive contact with highland Austronesians began with Japanese colonization during the first half of the 20th century and continued under the Nationalist government from 1945 until the lifting of martial law in 1987.

In 2001, the government inaugurated a revitalization program with the hope of invigorating the by then already endangered Austronesian languages, for example by introducing ethnic language education into local school curriculums.

This presentation sketches the history of foreign contact, government language policies (particularly in the 20th century), revitalization efforts, and some outcomes of these policies and programs.

Bio:

Dr. Aldridge is a Research Fellow at the Institute of Linguistics, Academia Sinica in Taiwan. Her research focuses mainly on comparative and diachronic syntax, with language concentrations in Austronesian, Chinese, and Japanese.

Location: 110 White Hall, 123 Central Ave., Ithaca, NY 14850, USA

12th February 2024 12:20 PM

Phonetics Lab Meeting

Chloe will lead a discussion of "Articulatory and acoustic studies on domain-initial strengthening in Korean" by Taehong Cho and Patricia A. Keating. Make sure you have read it.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response time measurement

- Graphical task builder, which lets researchers build experiments interactively without writing any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments.

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth.

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This dual-processor, 24-core, two-GPU Ubuntu Linux server is the computational workhorse for the Phonetics Lab and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with a total of ~7400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.
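As a rough illustration of what submitting a batch job involves, the Python sketch below assembles the text of a SLURM batch script. The function name, the default resource values, and the example job are hypothetical and are not taken from the G2 Cluster's documentation; actual limits and partition names need to be checked with the cluster administrators. Only standard `#SBATCH` directives are used.

```python
from typing import Optional


def build_sbatch_script(
    job_name: str,
    command: str,
    gpus: int = 1,
    cpus_per_task: int = 4,
    mem_gb: int = 16,
    time_limit: str = "04:00:00",
    partition: Optional[str] = None,
) -> str:
    """Return the text of a SLURM batch script requesting the given resources.

    All defaults are illustrative assumptions, not G2 Cluster policy.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --output={job_name}_%j.out",  # SLURM replaces %j with the job ID
        f"#SBATCH --cpus-per-task={cpus_per_task}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
    ]
    if gpus > 0:
        # Request generic GPU resources; omitted entirely for CPU-only jobs.
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    if partition is not None:
        # Partition names are site-specific, so none is assumed by default.
        lines.append(f"#SBATCH --partition={partition}")
    # Blank line, then the command the job should run, then a trailing newline.
    lines += ["", command, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical job: the script name and arguments are placeholders.
    print(build_sbatch_script("train_model", "python train.py --epochs 10"))
```

Saving the returned text to a file such as `train_model.sh` and running `sbatch train_model.sh` on a login node would queue the job; SLURM then chooses a node that satisfies the requested resources.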

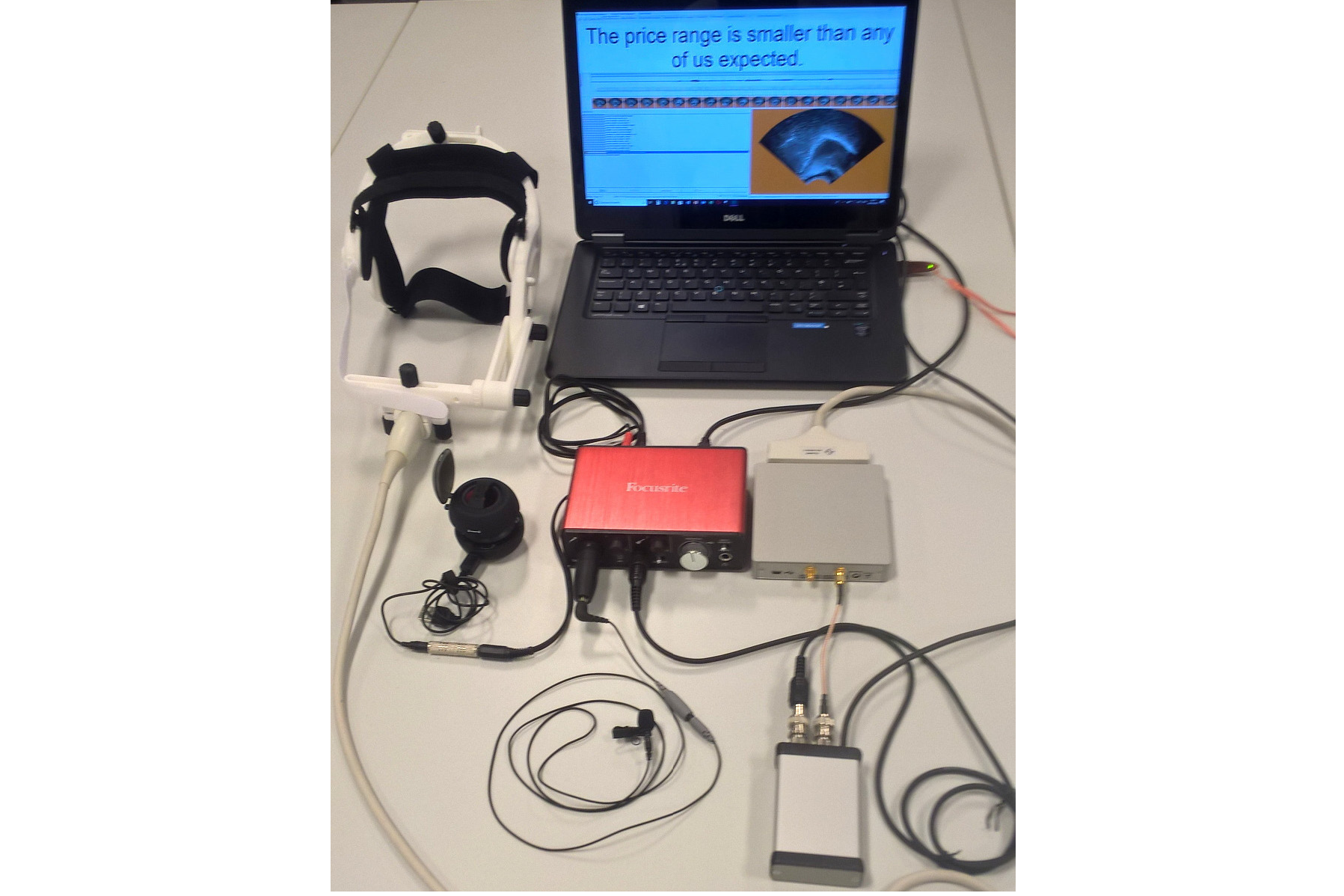

Articulate Instruments - Micro Speech Research Ultrasound System

We use the Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page, available only to Cornell faculty and students, outlines the corpora access procedures for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

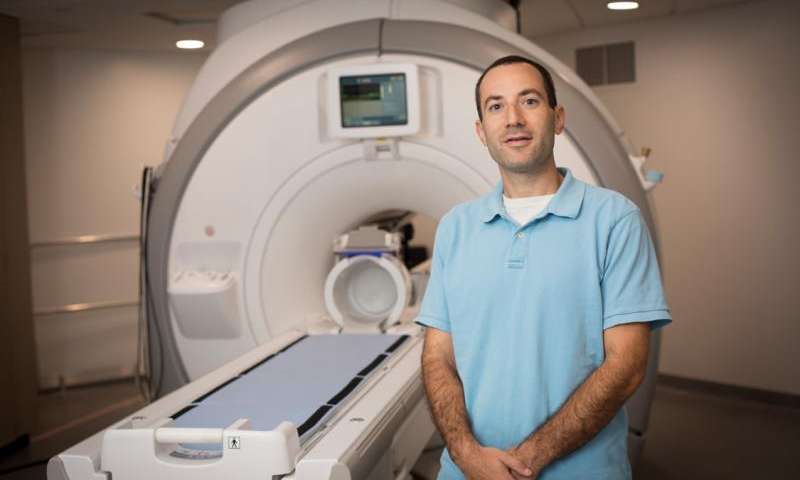

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency, real-time auditory feedback experiments via MATLAB and Audapter.