About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

28th February 2024 12:20 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

Sam and Jeremy will discuss statistical model evaluation and demonstrate various ideas using RStudio and a dataset of penguin anatomy measurements.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

4th March 2024 12:20 PM

Phonetics Lab Meeting

Annabelle will lead a discussion of this paper:

What it means to be phonetic or phonological: The case of Romanian devoiced nasals - by Benjamin V. Tucker and Natasha Warner, Phonology 27 (2010) 289-324

Abstract

Abstract phonological patterns and detailed phonetic patterns can combine to produce unusual acoustic results, but criteria for what aspects of a pattern are phonetic and what aspects are phonological are often disputed. Early literature on Romanian makes mention of nasal devoicing in word-final clusters (e.g. in /basm/ 'fairy-tale').

Using acoustic, aerodynamic and ultrasound data, the current work investigates how syllable structure, prosodic boundaries, phonetic paradigm uniformity and assimilation influence Romanian nasal devoicing. It provides instrumental phonetic documentation of devoiced nasals, a phenomenon that has not been widely studied experimentally, in a phonetically underdocumented language.

We argue that sound patterns should not be separated into phonetics and phonology as two distinct systems, but neither should they all be grouped together as a single, undifferentiated system. Instead, we argue for viewing the distinction between phonetics and phonology as a largely continuous multidimensional space, within which sound patterns, including Romanian nasal devoicing, fall.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

6th March 2024 12:20 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

We'll watch this video by Dr. Jean-luc Doumont titled: Creating effective slides: Design, Construction, and Use in Science. This video will help you design presentations that listeners will understand and remember.

Dr. Doumont is an expert in the art of effective scientific communication, and through his company Principiae he regularly consults with companies and universities to improve research communication skills.

Location: B11 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

7th March 2024 04:30 PM

Linguistics Colloquium Speaker Dr. Matthew Faytak to speak on Piaroa nasal harmony aerodynamics

The Cornell Linguistics Department proudly presents Linguistics Colloquium Speaker Dr. Matthew Faytak, Assistant Professor at the University at Buffalo. Dr. Faytak will give a talk titled: The aerodynamics of nasal harmony in Piaroa

A light reception with the speaker will follow in the Linguistics Lounge. Please contact the Linguistics Department at lingdept@cornell.edu at least 72 hours in advance of the event for any special arrangements you may require in order to attend this event.

Abstract:

The phonetics of nasality have mainly been studied in a convenience sample of languages in the Global North that exhibit binary nasal contrasts. The study of nasal phenomena in Amazonian languages is a crucial counterweight to this bias, but has been hampered by its reliance on impressionistic transcriptions of various analysts, which often disagree substantially in their details. In particular, our understanding of two phenomena, long-distance nasal harmony and subphonemic differences in timing of velum gestures in nasal(ized) segments, is undermined by ambiguities and variation present in much existing descriptive work.

This talk is part of a larger NSF-funded collaborative project on phonetics and phonological typology of nasality in lowland Amazonian languages, the major goal of which is to replace impressionistic generalizations about nasal phenomena with instrumental observations. To this end, a team of experienced field linguists has collected aerodynamic data (nasal and oral airflow) with speakers of twelve languages from several Amazonian language families. After an overview of project sites, collaborators, and cross-site methods, I turn to ongoing analysis of a data set collected by Jorge Rosés Labrada on Piaroa (ISO 639-3 [pid], Sáliban) with 10 speakers from the community of Babel (Amazonas State, Venezuela). Piaroa is thought to have long-distance nasal harmony, but prior analyses fail to reach any consensus on how far nasality spreads leftward from a nasal vowel trigger; whether voiceless consonants block harmony; and whether voiceless consonants are transparent or undergo nasalization to some limited extent.

I discuss three of the group’s findings on Piaroa:

1) Nasality spreads right to left from the trigger to the word edge, but diminishes in magnitude further from the trigger.

2) No consonants appear to block spread of nasalization from the trigger vowel.

3) Within nasal spans, voiceless consonants show increased nasal airflow in nasal harmony conditions, indicating partial nasalization at the start of the closure period. In other words, all Piaroa consonants appear to be at least partial undergoers of nasal harmony.

I suggest an account for (1-3) based on co-activation of conflicting velum gestures for the harmony span and individual consonants, and compare selected findings with data on two other project languages, Panãra (collected by Myriam Lapierre) and Maihɨ̃ki (collected by Lev Michael).

Bio:

Dr. Faytak is a phonetician who specializes in articulatory phonetics, especially tongue ultrasound imaging. The theoretical focus of his research is on the connections between low-level biases in speech production and phonetic and phonological structure. He places a high priority on phonetic description and field data collection, particularly for languages of Cameroon and China.

He received his PhD in Linguistics in 2018 from UC Berkeley. His dissertation concerns within-speaker uniformity of articulatory strategies for fricative consonants and a subset of the vowels in Suzhou 苏州 Chinese.

Currently, he is an Assistant Professor in the University at Buffalo's Department of Linguistics. Most recently before this, he was a postdoctoral research fellow and lecturer in the Department of Linguistics at UCLA.

Location: 106 Morrill Hall, 159 Central Avenue, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response time measurement

- Graphical task builder, which lets researchers build experiments interactively without having to write any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments.

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth.

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak.

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with a total of ~7400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.
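As a rough illustration of what a batch job looks like, the Python sketch below builds the text of a SLURM batch script from a few resource requests. The `#SBATCH` options shown (`--job-name`, `--output`, `--cpus-per-task`, `--mem`, `--time`, `--gres`, `--partition`) are standard SLURM options, but the function name, job name, command, and resource values are all hypothetical, and the partitions and limits that apply on G2 are not covered here.

```python
def render_sbatch_script(job_name, command, gpus=1, cpus=4, mem_gb=16,
                         time_limit="02:00:00", partition=None):
    """Return the text of a SLURM batch script.

    All defaults are illustrative assumptions, not G2 settings.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        # %j is replaced by SLURM with the numeric job ID.
        f"#SBATCH --output={job_name}-%j.out",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
    ]
    if gpus > 0:
        # Request GPUs as a generic resource; omitted for CPU-only jobs.
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    if partition:
        # Partition names are cluster-specific; none is assumed here.
        lines.append(f"#SBATCH --partition={partition}")
    lines += ["", command, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical job: one GPU, running a placeholder training script.
    print(render_sbatch_script("train_model", "python train.py", gpus=1))
```

Saving the printed text to a file such as `train_model.sh` and running `sbatch train_model.sh` on a login node would queue the job; SLURM then places it on a node that can satisfy the requested resources.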

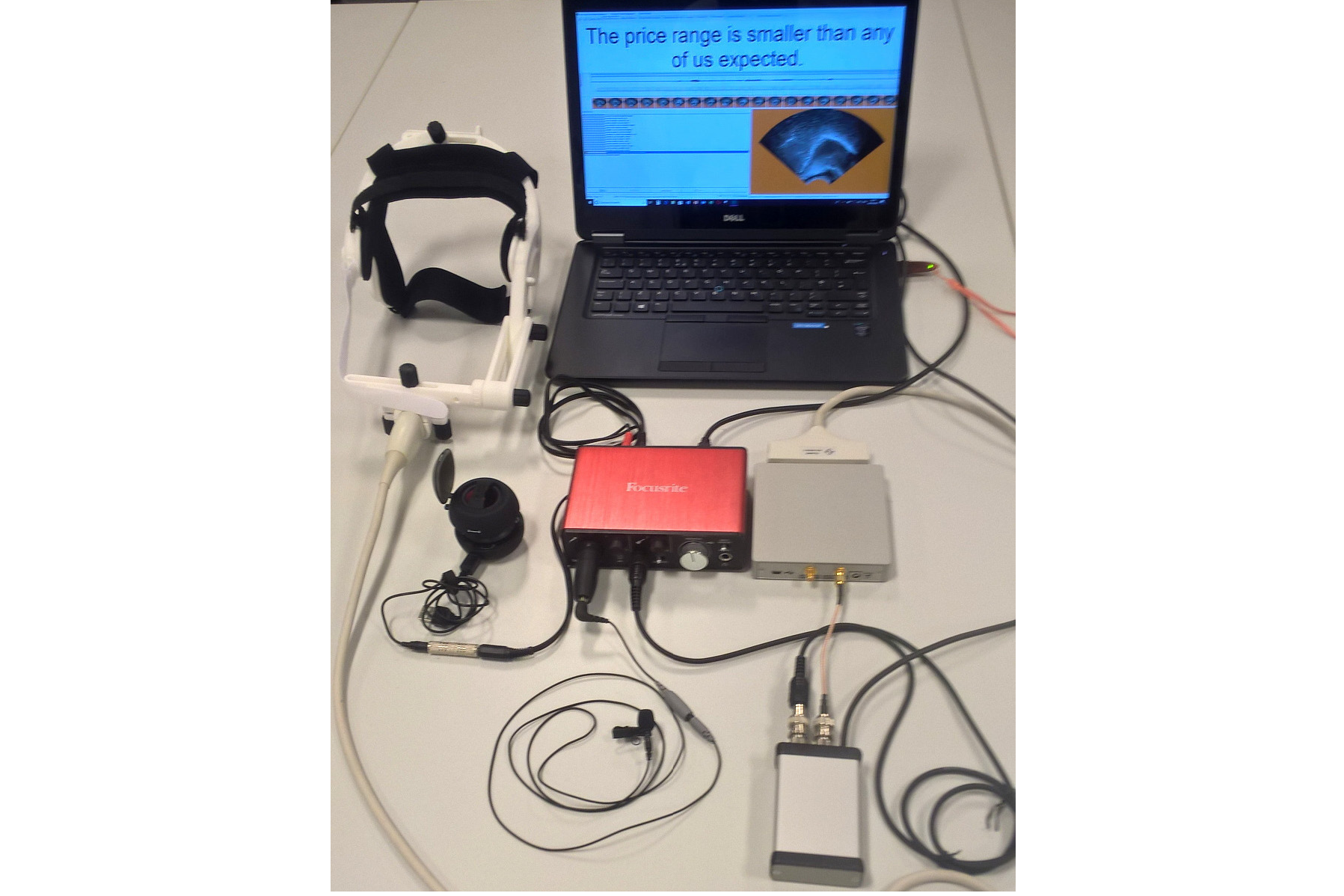

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page (available only to Cornell faculty and students) outlines the corpora access procedures for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

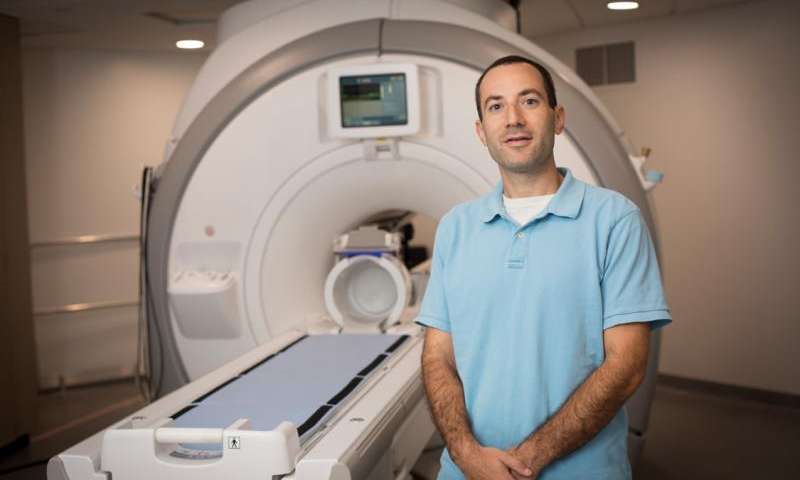

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency real-time auditory feedback experiments via MATLAB and Audapter.