About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech, in both movement and sound. We do a variety of research: experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

20th September 2024 01:00 PM

Linguistics Syntax Circle Speaker: John Frederick Bailyn

The Department of Linguistics Syntax Circle proudly presents Dr. John Bailyn, Professor at Stony Brook University (Ph.D. 1995, Cornell University).

Dr. Bailyn will speak on "Motivated Leapfrogging: a Slightly Cartographic Approach to Weak Islands".

Abstract:

Weak islands, such as WH-islands, well-known since Huang 1982, present three puzzles for syntactic theory.

1. a. ?Which problem do you wonder how to solve ? (?argument extraction over WH)

b. *How do you wonder which problem to solve ? (*adjunct extraction over WH)

Puzzle 1: They constrain long-distance dependencies in a way standard subordination does not: (1) is worse than extraction from indicatives.

Puzzle 2: They reveal a significant contrast between arguments and adjuncts ((1a) is much better than (1b)).

Puzzle 3: The argument extraction that is possible in (1a) is not entirely fine ((1a) is somewhat degraded).

The traditional story for Puzzle 1 is the need for successive cyclicity (Spec CP is unavailable). This tradition is maintained in minimalism by the requirement that escaping a phase must proceed through its edge, which in this case is occupied. For Puzzle 2, the traditional story concerns a looser condition on argument extraction than adjunct extraction, which cannot easily be maintained without the ECP (now unavailable). Puzzle 3 is essentially unexplained in previous accounts other than to stipulate that Subjacency violations are mild.

In this talk, I reexamine extraction from wh-islands, and show that all three puzzles (and a fourth that we will acquire along the way) lead us to a theory that involves "Leapfrogging" (in the spirit of Zeijlstra & Keine), a way of bypassing Relativized Minimality, without which no element can escape weak islands. The overall picture will sharpen our understanding of long-distance dependencies and cast doubt on the need for successive cyclicity and, ultimately, for phase theory.

Bio:

John Frederick Bailyn's research involves investigations of the workings of the linguistic component of the mind, with particular attention to the Slavic languages. Within theoretical linguistics, his primary interests lie in generative syntax, especially issues of case, word order and movement. Within cognitive science, he is interested in issues of modularity, creativity, and musical perception. Within Slavic Linguistics, he is interested in Russian Syntax, Morphology, and Phonology, comparative Slavic syntax, and historical linguistics.

JFB is also the co-Director of the NY-St. Petersburg Institute of Linguistics, Cognition and Culture (NYI), St. Petersburg, founded in 2003 and entering its 18th year in 2020.

Location: 106 Morrill Hall, Cornell University Dept, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

23rd September 2024 12:20 PM

Phonetics Lab Meeting

Jennifer will give a tutorial on MaxEnt - a maximum entropy framework for estimating probability distributions.

Programs using MaxEnt models have reached (or are) the state of the art on tasks like part-of-speech tagging, sentence detection, prepositional phrase attachment, and named entity recognition.
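For readers new to the idea, here is a minimal sketch of maximum entropy estimation. It is not taken from the tutorial itself. It assumes the textbook setting of a die whose only known property is its average roll; the function name `maxent_distribution` and its parameters are hypothetical and chosen for illustration. The maximum entropy solution under a mean constraint has the exponential form p(x) proportional to exp(lam * x), so the sketch only needs to search for the single parameter `lam`.

```python
import math


def maxent_distribution(values, target_mean, tol=1e-10):
    """Maximum-entropy distribution over `values` with a fixed mean.

    Assumes min(values) < target_mean < max(values). The solution has the
    form p_i proportional to exp(lam * x_i); lam is found by bisection,
    because the model mean increases monotonically with lam.
    """

    def model(lam):
        exponents = [lam * x for x in values]
        shift = max(exponents)  # subtract the max for numerical stability
        weights = [math.exp(e - shift) for e in exponents]
        total = sum(weights)
        probs = [w / total for w in weights]
        mean = sum(p * x for p, x in zip(probs, values))
        return probs, mean

    lo, hi = -50.0, 50.0  # search interval for lam (an illustrative choice)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        _, mean = model(mid)
        if mean < target_mean:
            lo = mid
        else:
            hi = mid
    probs, _ = model((lo + hi) / 2)
    return probs


# A die whose average roll is known to be 4.5 instead of the fair 3.5.
faces = [1, 2, 3, 4, 5, 6]
probs = maxent_distribution(faces, 4.5)
for face, p in zip(faces, probs):
    print(face, round(p, 4))
```

With a target mean of 3.5 the same function returns the uniform distribution, which is the sense in which MaxEnt adds no assumptions beyond the stated constraints.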

Location: B11 Morrill Hall, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

24th September 2024 12:00 PM

Simon Roessig Watch Party

We'll meet in B11 to watch P-Lab alumnus Dr. Simon Roessig talk about Syntagmatic Prominence Relations in Prosodic Focus Marking.

The YouTube link for Simon's talk is: https://www.youtube.com/watch?v=YUE9pRbq9w0

--------------------------------------------------------------------------------

Simon is giving this talk through the Speech Prosody Special Interest Group's Lecture Series, which aims to:

(1) Offer to the Speech Prosody community a well-covered view of themes and methods in speech prosody;

(2) Introduce new perspectives and foster debate;

(3) Stimulate collaborations among speech prosody researchers, including by making known to the community the existence of public repositories with data, corpora, joint projects asking for collaboration and other resources that can be freely shared.

Lectures will be presented live on YouTube, with Q&A handled through YouTube's chat feature.

Location: B11 Morrill Hall, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

25th September 2024 12:20 PM

PhonDAWG - Phonetics Lab Data Analysis Working Group

Dr. Maya Abtahian (University of Rochester) will give a Zoom talk on language shift, variation, and change.

Then Abby and Maya will present a background questionnaire for an upcoming language heritage paper.

Location: B11 Morrill Hall, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

LabVanced has particular value for phonetics and phonology experiments because of its:

- Flexible audio/video recording capabilities and online eye-tracking

- Presentation of any kind of stimuli, including audio and video

- Highly accurate response time measurement

- Graphical task builder, which lets researchers build experiments interactively without writing any code

Students and faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- Prolific and Amazon Mechanical Turk - subjects for web-based experiments

- Sona Systems - Cornell subjects for LabVanced experiments conducted in the Phonetics Lab's Sound Booth

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two-GPU server is the computational workhorse for the Phonetics Lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs

- Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- Oelek - a Linux NFS storage server that supports Badjak

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) with a total of ~7400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.
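As a rough illustration of what a SLURM batch job looks like, the sketch below builds a batch script as a string. The helper `render_sbatch_script`, its defaults, and the example job are hypothetical. The `#SBATCH` options shown (`--job-name`, `--output`, `--gres`, `--cpus-per-task`, `--mem`, `--time`) are standard SLURM directives, but partition names, resource limits, and account settings on G2 are cluster-specific and are deliberately left out.

```python
def render_sbatch_script(job_name, command, gpus=1, cpus=4, mem_gb=16,
                         time_limit="02:00:00"):
    """Return the text of a SLURM batch script (illustrative defaults)."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        # %j expands to the job ID, so each run gets its own log file.
        f"#SBATCH --output={job_name}_%j.out",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        f"#SBATCH --time={time_limit}",
    ]
    if gpus > 0:
        # Request GPUs only when the job needs them.
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    lines += ["", command]
    return "\n".join(lines) + "\n"


# Hypothetical example: a two-GPU training job.
script = render_sbatch_script("train", "python train.py", gpus=2)
print(script)
```

The generated text can be saved to a file such as `train.sh` and submitted with `sbatch train.sh`; `squeue` then shows the job's status in the queue.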

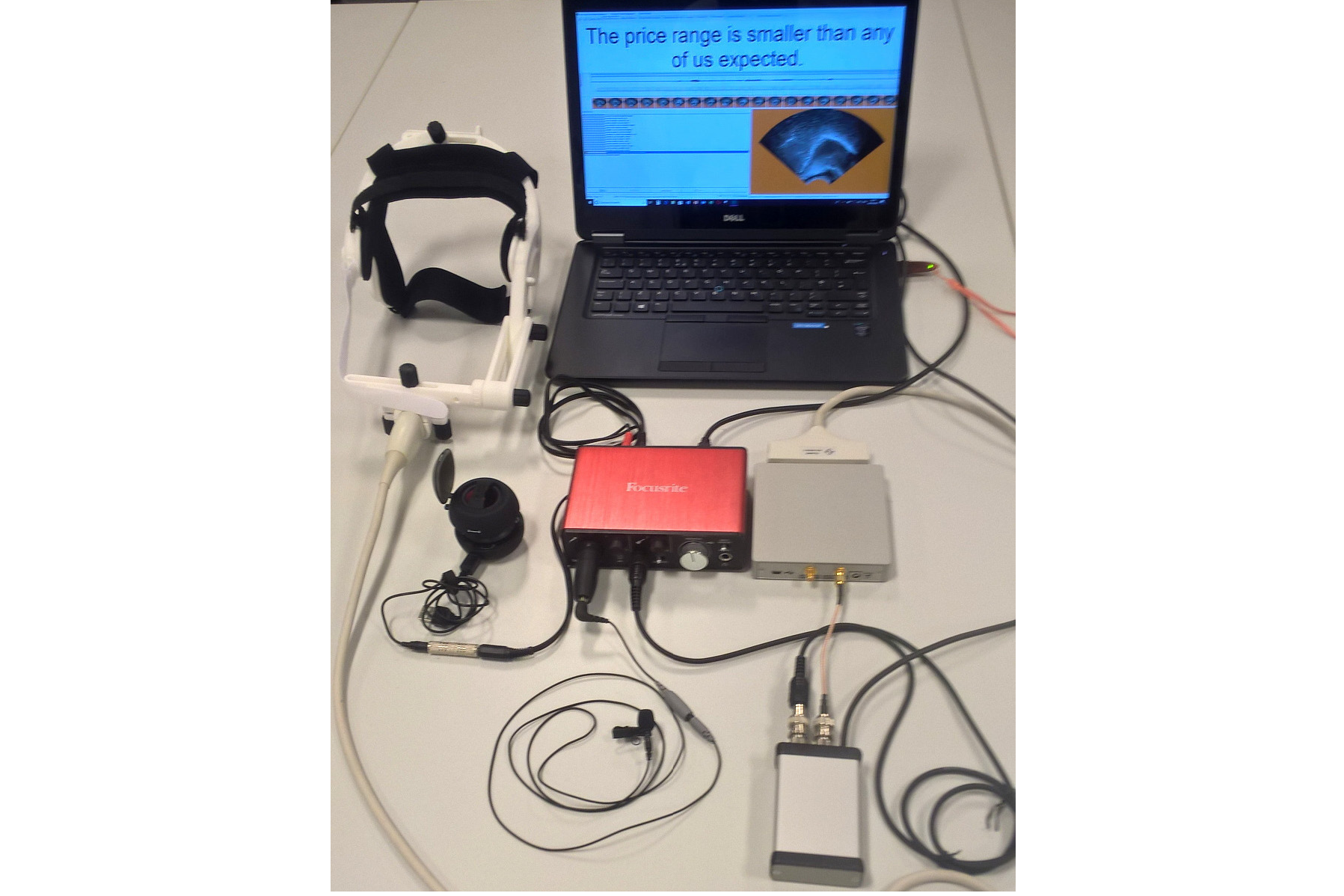

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistics Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page - only available to Cornell faculty & students - outlines the corpora access procedures for faculty-supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

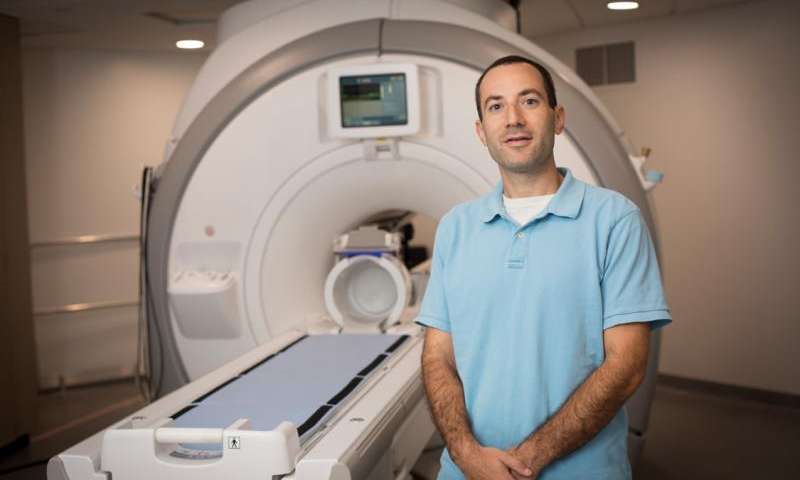

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes, from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low-latency real-time auditory feedback experiments via MATLAB and Audapter.