About

The Cornell Phonetics Lab is a group of students and faculty who are curious about speech. We study patterns in speech — in both movement and sound. We do a variety research — experiments, fieldwork, and corpus studies. We test theories and build models of the mechanisms that create patterns. Learn more about our Research. See below for information on our events and our facilities.

Upcoming Events

3rd October 2024 04:30 PM

Linguistics Colloquium Speaker: Dr. Molly Babel to lecture on "What's in a voice? Language-specific and language-general phonetics"

The Department of Linguistics proudly presents Dr. Molly Babel, Professor of Linguistics at the University of British Columbia and director of the University's Speech in Context Lab.

Dr. Babel will give a talk titled: What's in a voice? Language-specific and language-general phonetics

A light reception with the speaker to follow.

Abstract:

Voices are rich with biological, social, and linguistic signatures all baked into the same signal. Bilingual voices are particularly interesting because when speaking one language or the other, a talker changes the linguistic and, often, social packaging, but an individual’s vocal tract anatomy remains constant.

In this talk, I share our work on the acoustic structures across languages in Cantonese-English bilingual voices, which demonstrates that Cantonese-English bilingual voices are highly similar across languages (Johnson & Babel, 2023).

This would suggest that it would be easy to track a bilingual’s identity across languages, which we know empirically not to be the case. Armed, however, with the knowledge that there is substantial information shared across a bilingual’s languages, we test listeners from four language backgrounds, to assess whether group-level differences in voice learning are due (i) to varying abilities to access the relative acoustic-phonetic features that distinguish a voice, (ii) to learn at a given rate, or (iii) to generalize learning of talker-voice associations to novel same-language and different-language utterances.

Differences in performance across listener groups show evidence in support of the language familiarity effect and of the role of two mechanisms for voice learning: the extraction and association of talker-specific, language-general information that can be more robustly generalized across languages, and talker-specific, language-specific information that may be more readily accessible and learnable, but due to its language-specific nature, is less able to be extended to another language.

Bio:

Dr. Babel is interested in speech perception and production, and there is a strong theme of cross-linguistic and cross-dialectal inquiry in her work.

More specifically, her research program focuses on the role of experience and exposure to phonetic and phonological knowledge, how social knowledge may be manifested phonetically, and the mental representation of phonetic and phonological knowledge.

A significant portion of her work explores how interacting language systems influence one another on a phonetic level. She has investigated this within bilingual speakers (English and Northern Paiute), across dialects (Australian and New Zealand Englishes), and within dialects (American English).

She has also have a strong interest in language documentation and description; with colleagues at University of California, Berkeley, she conducted fieldwork on Northern Paiute (Numic; Uto-Aztecan).

Location: Morrill Hall, 106 Cornell University Dept, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

3rd October 2024 06:00 PM

ASL Performance Series presents Jeremy Lee Stone in "ASL is ART"

The Department of Linguistics and ASL Program proudly presents Jeremy Lee Stone, from ASL NYC.

Jeremy will perform "ASL is ART", where he will explore the history and evolution of ASL poetry, highlighting key figures like Clayton Valli. He will perform his ABC story “The Matrix” and the ASL poem “Glitch,” showcasing storytelling techniques such as Visual Vernacular. Audience members will engage in interactive exercises, including an Alphabet story and a slow-motion group activity. Jeremy will also encourage incorporating ASL into personal expressions and interests.

The session concludes with a Q&A.

ASL/English interpretation will be provided.

Bio:

Jeremy Lee Stone, a native of Harlem, New York, was born Deaf, and from that silence, he has amplified a powerful message. Fluent in American Sign Language, he is on a mission to showcase ASL as the language of the future, proving that the future is here and now. Recognizing the need for broader understanding and acceptance, Jeremy founded ASL NYC, a groundbreaking ASL class.

Venturing into the entertainment industry, he has become a sought-after ASL Consultant. Through his work, he aims to redefine perceptions of deafness and seamlessly integrate it into mainstream consciousness. Beyond his professional endeavors, Jeremy finds joy in crafting stories through the fluid motions of his hands, bringing his visions to life in a captivating tapestry of expression.

Location: Room 165, McGraw Hall, Cornell University, Ithaca, NY 148534th October 2024 11:15 AM

C.Psyd - Beyond Projecting: Nouns’ and Modifiers’ Influence on Protorole Properties

This week in C.Psyd, Zander and Lucas will present some of their ongoing work.

Their presentation is titled: Projecting: Nouns’ and Modifiers’ Influence on Protorole Properties

Abstract:It has long been thought that verbs are the primary focus of events due to linguistic theories which hypothesize that thematic roles are projected from the verb onto its arguments.

For example, Dowty (1991) claimed that verbs entail a set of characteristics onto their arguments, which can be categorized as either proto-agent (eg. awareness, instigation, sentience) or proto-patient (eg. change of state, change of location, manipulated). However, Husband (2023) argues for thematic separation, in which arguments may be given thematic roles, or alternatively Dowty’s protoroles, prior to reading the verb.

In this project, we investigate the role of nouns and adjectives in assigning and modifying role features previously theorized to be assigned only by verbal predicates. We probe computational models trained to predict proto-role properties to show that LLMs largely attend to the arguments themselves, especially for subjects.

Subsequently we used WordNet to identify the features of nouns that are semantically meaningful for assigning proto-role properties. As well as looking at nouns we also looked into adjectives as a means of assigning roles to the arguments of a sentence through experimental methods of annotation and judgment collection.

These preliminary results indicate that roles are not solely given out by the verb but rather by some combination of the contentful words in a sentence.

Location: B07 Morrill Hall, 106 Cornell University Dept, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

4th October 2024 12:20 PM

Informal Talk by Molly Babel: The own-voice benefit in word recognition

Dr. Molly Babel will give an informal talk titled: The own-voice benefit in word recognition

Abstract:

There is a well-documented familiarity benefit in spoken word recognition and sentence processing. Simply, listeners show improved performance when presented with familiar languages, accents, and individuals. How does an individual’s own voice factor into the familiarity boost?

In this talk, I present the evidence for an own-voice benefit in word recognition in a population of early bilinguals, extending recent work demonstrating an own-voice benefit for late L2 learners (Eger & Reinisch, 2019).

The results suggest that the phonetic distributions that undergird phonological contrasts are heavily shaped by one’s own phonetic realization. I’ll discuss the implications of this for our understanding of phonetic knowledge

Location: Morrill Hall, 106 Cornell University Dept, 159 Central Avenue, Morrill Hall, Ithaca, NY 14853-4701, USA

Facilities

The Cornell Phonetics Laboratory (CPL) provides an integrated environment for the experimental study of speech and language, including its production, perception, and acquisition.

Located in Morrill Hall, the laboratory consists of six adjacent rooms and covers about 1,600 square feet. Its facilities include a variety of hardware and software for analyzing and editing speech, for running experiments, for synthesizing speech, and for developing and testing phonetic, phonological, and psycholinguistic models.

Web-Based Phonetics and Phonology Experiments with LabVanced

The Phonetics Lab licenses the LabVanced software for designing and conducting web-based experiments.

Labvanced has particular value for phonetics and phonology experiments because of its:

- *Flexible audio/video recording capabilities and online eye-tracking.

- *Presentation of any kind of stimuli, including audio and video

- *Highly accurate response time measurement

- *Researchers can interactively build experiments with LabVanced's graphical task builder, without having to write any code.

Students and Faculty are currently using LabVanced to design web experiments involving eye-tracking, audio recording, and perception studies.

Subjects are recruited via several online systems:

- * Prolific and Amazon Mechanical Turk - subjects for web-based experiments.

- * Sona Systems - Cornell subjects for for LabVanced experiments conducted in the Phonetics Lab's Sound Booth

Computing Resources

The Phonetics Lab maintains two Linux servers that are located in the Rhodes Hall server farm:

- Lingual - This Ubuntu Linux web server hosts the Phonetics Lab Drupal websites, along with a number of event and faculty/grad student HTML/CSS websites.

- Uvular - This Ubuntu Linux dual-processor, 24-core, two GPU server is the computational workhorse for the Phonetics lab, and is primarily used for deep-learning projects.

In addition to the Phonetics Lab servers, students can request access to additional computing resources of the Computational Linguistics lab:

- *Badjak - a Linux GPU-based compute server with eight NVIDIA GeForce RTX 2080Ti GPUs

- *Compute server #2 - a Linux GPU-based compute server with eight NVIDIA A5000 GPUs

- *Oelek - a Linux NFS storage server that supports Badjak.

These servers, in turn, are nodes in the G2 Computing Cluster, which currently consists of 195 servers (82 CPU-only servers and 113 GPU servers) consisting of ~7400 CPU cores and 698 GPUs.

The G2 Cluster uses the SLURM Workload Manager for submitting batch jobs that can run on any available server or GPU on any cluster node.

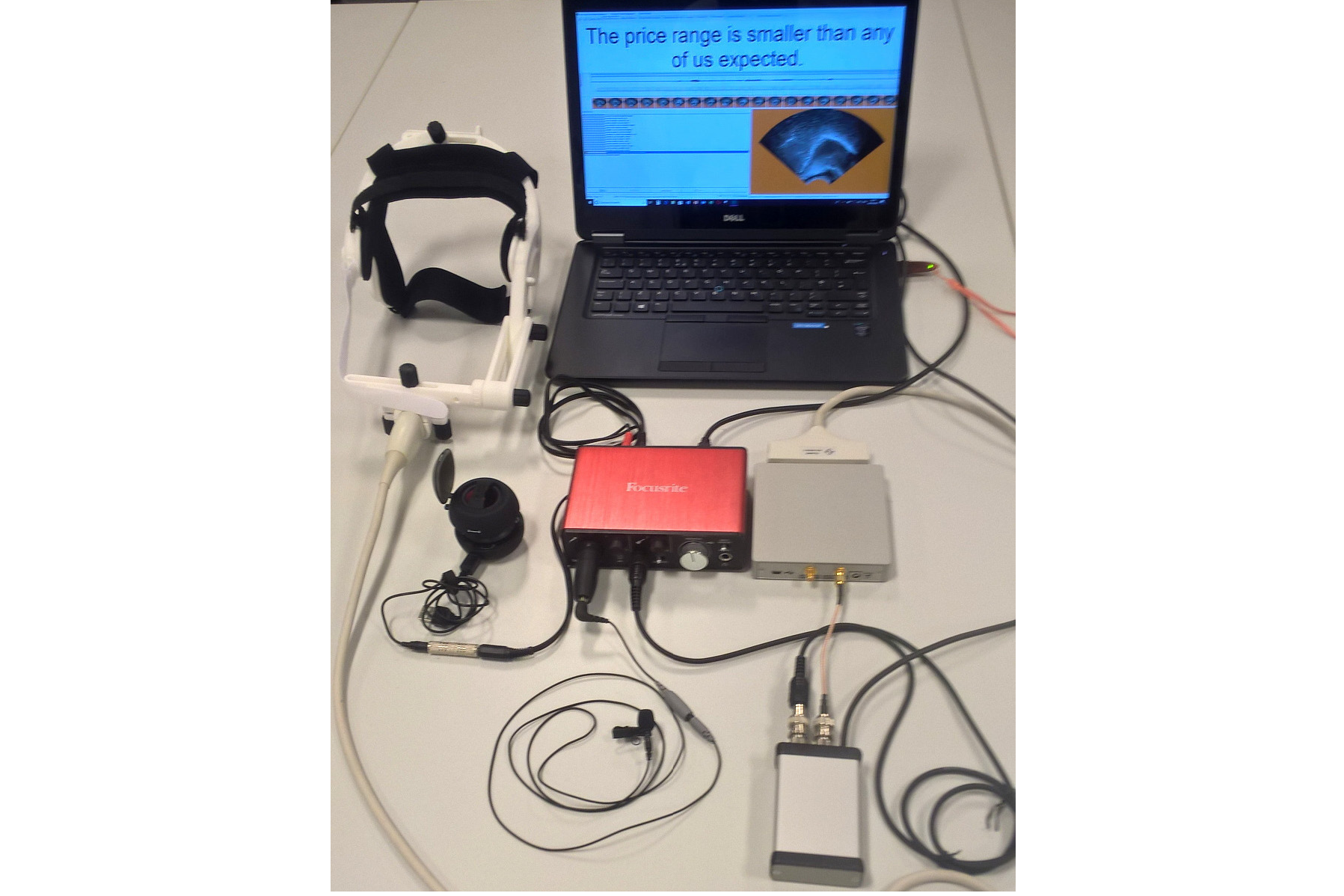

Articulate Instruments - Micro Speech Research Ultrasound System

We use this Articulate Instruments Micro Speech Research Ultrasound System to investigate how fine-grained variation in speech articulation connects to phonological structure.

The ultrasound system is portable and non-invasive, making it ideal for collecting articulatory data in the field.

BIOPAC MP-160 System

The Sound Booth Laboratory has a BIOPAC MP-160 system for physiological data collection. This system supports two BIOPAC Respiratory Effort Transducers and their associated interface modules.

Language Corpora

- The Cornell Linguistics Department has more than 915 language corpora from the Linguistic Data Consortium (LDC), consisting of high-quality text, audio, and video corpora in more than 60 languages. In addition, we receive three to four new language corpora per month under an LDC license maintained by the Cornell Library.

- This Linguistic Department web page lists all our holdings, as well as our licensed non-LDC corpora.

- These and other corpora are available to Cornell students, staff, faculty, post-docs, and visiting scholars for research in the broad area of "natural language processing", which of course includes all ongoing Phonetics Lab research activities.

- This Confluence wiki page - only available to Cornell faculty & students - outlines the corpora access procedures for faculty supervised research.

Speech Aerodynamics

Studies of the aerodynamics of speech production are conducted with our Glottal Enterprises oral and nasal airflow and pressure transducers.

Electroglottography

We use a Glottal Enterprises EG-2 electroglottograph for noninvasive measurement of vocal fold vibration.

Real-time vocal tract MRI

Our lab is part of the Cornell Speech Imaging Group (SIG), a cross-disciplinary team of researchers using real-time magnetic resonance imaging to study the dynamics of speech articulation.

Articulatory movement tracking

We use the Northern Digital Inc. Wave motion-capture system to study speech articulatory patterns and motor control.

Sound Booth

Our isolated sound recording booth serves a range of purposes--from basic recording to perceptual, psycholinguistic, and ultrasonic experimentation.

We also have the necessary software and audio interfaces to perform low latency real-time auditory feedback experiments via MATLAB and Audapter.