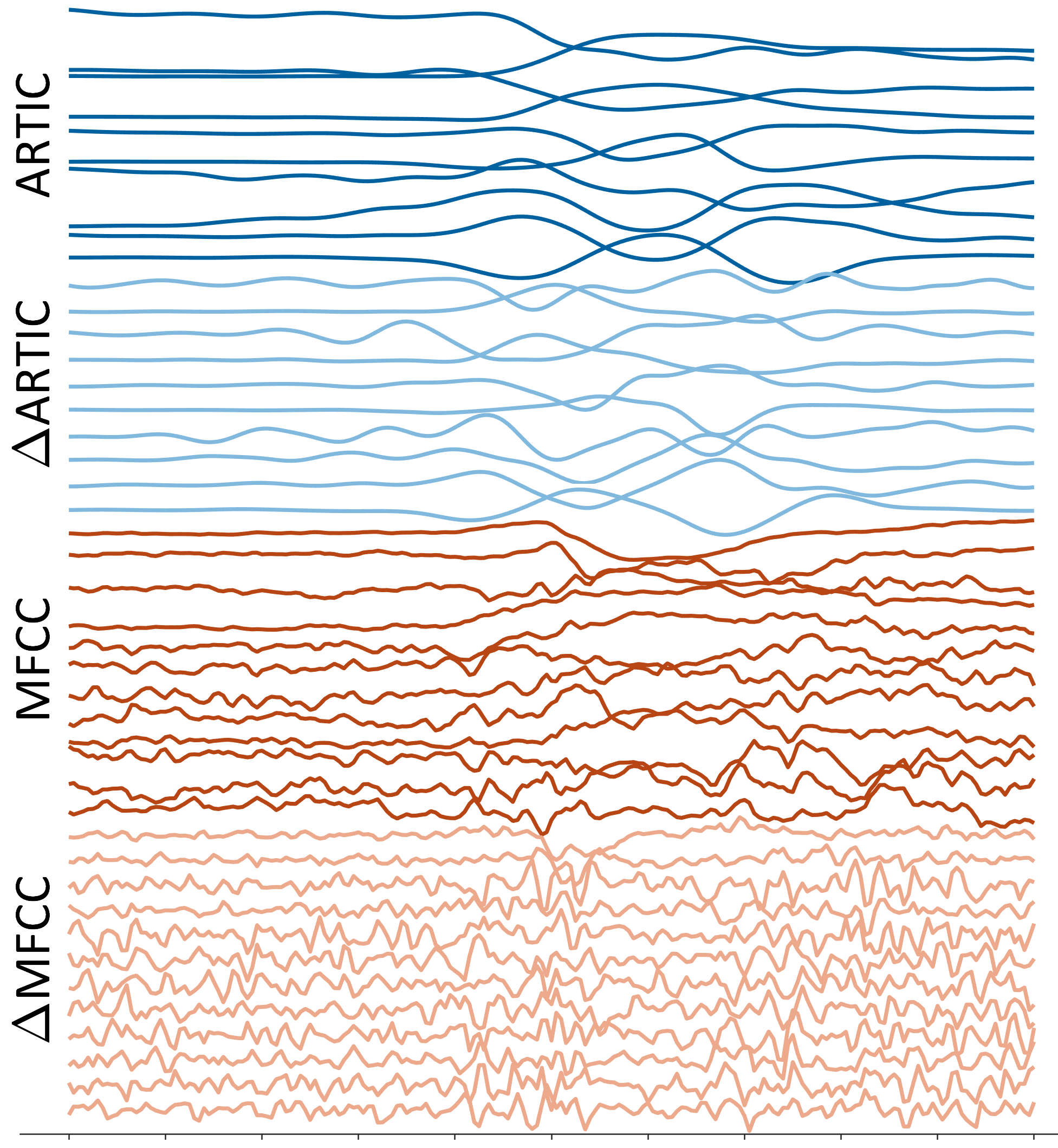

The speech system, and our measurements of its outputs (articulator positions and acoustic signals), are very high-dimensional. In contrast, our theories posit low-dimensional categories such as phonemes and gestures. How can we determine, from articulatory and acoustic signals, where in time there is evidence for those categories, and which signal dimensions carry that information? This project uses deep neural networks to estimate the temporal and spatial distributions of category-related information, that is, when in the signal and in which of its dimensions that evidence appears.
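The project itself uses deep neural networks. As a much simpler stand-in, the general logic of localizing category information in time and across signal dimensions can be sketched with a cross-validated nearest-centroid classifier in NumPy. This is an illustrative substitute, not the project's method. The array layout (tokens × frames × channels), the window length, the fold count, the synthetic data, and all function names are hypothetical. The idea is that cross-validated accuracy reliably above chance in a time window or channel is taken as evidence that the window or channel carries category information.

```python
import numpy as np


def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Fit class means on the training set; score test items by nearest mean."""
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    # Squared Euclidean distance from each test item to each centroid: (n_test, n_classes)
    d = ((X_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = classes[d.argmin(axis=1)]
    return float((pred == y_test).mean())


def cv_accuracy(X, y, n_folds=5, seed=0):
    """K-fold cross-validated accuracy on (n_tokens, n_features) data."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    accs = []
    for k in range(n_folds):
        test = folds[k]
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        accs.append(nearest_centroid_accuracy(X[train], y[train], X[test], y[test]))
    return float(np.mean(accs))


def temporal_profile(signals, labels, win=5, step=5):
    """Decode from sliding time windows, pooling all channels in each window.

    signals: (n_tokens, n_frames, n_channels). Returns window starts and accuracies.
    """
    n_frames = signals.shape[1]
    starts = np.arange(0, n_frames - win + 1, step)
    accs = [
        cv_accuracy(signals[:, s:s + win, :].reshape(len(labels), -1), labels)
        for s in starts
    ]
    return starts, np.array(accs)


def channel_profile(signals, labels):
    """Decode from each channel separately, pooling all frames of that channel."""
    return np.array(
        [cv_accuracy(signals[:, :, c], labels) for c in range(signals.shape[2])]
    )


def make_synthetic(seed=1):
    """Hypothetical toy data: two categories differ only in channel 2, frames 20-29."""
    rng = np.random.default_rng(seed)
    n_tokens, n_frames, n_channels = 200, 40, 6
    labels = np.repeat([0, 1], n_tokens // 2)
    signals = rng.normal(size=(n_tokens, n_frames, n_channels))
    signals[:, 20:30, 2] += np.where(labels == 1, 1.0, -1.0)[:, None]
    return signals, labels


if __name__ == "__main__":
    signals, labels = make_synthetic()
    starts, accs = temporal_profile(signals, labels)
    for s, a in zip(starts, accs):
        print(f"frames {s}-{s + 4}: accuracy {a:.2f}")
    print("per-channel accuracy:", np.round(channel_profile(signals, labels), 2))
```

On the toy data, accuracy should rise well above chance (0.5) only for the windows covering frames 20-29 and only for channel 2. Replacing `nearest_centroid_accuracy` with a trained neural network would keep the same cross-validated structure; only the classifier would change.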


Localizing speech categories in space and time